In 1968, in the novel that would later inspire the film Blade Runner, the writer Philip K. Dick wondered whether robots would one day become sufficiently evolved to need to dream. Today, at U2IS, his laboratory at ENSTA Paris, Gianni Franchi would like artificial intelligence to learn to doubt its certainties.

In a paper recently accepted at the 11th International Conference on Learning Representations (ICLR, May 1-5, 2023), Gianni Franchi highlighted the unreasonable certainty of artificial neural networks when asked to assess the relevance of their results, and considered different ways to remedy this.

"Take the case of a neural network trained to recognize animals: cats, dogs, birds. At that task, it is unbeatable. But that's all it can do. If it is ever presented with an image of a car, there is a good chance that it will say that it is a dog, because that is the species with the greatest intrinsic morphological variability. For the network, the car will be a new type of dog, with round legs. And when asked how confident it is in this classification, it will give a score of 90%!"

This anecdote highlights the fact that neural networks are currently unable to model the limits of their knowledge. While the example may raise a smile, it points to a problem that could be much more serious in another context.
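One common reason for this behavior can be sketched in a few lines of Python. The sketch assumes the classifier ends with a softmax layer, which the article does not state; the class list and the raw scores for the car image are invented for illustration. Because softmax forces the probabilities of the known classes to sum to 1, there is no way for the network to answer "none of the above".

```python
import math

CLASSES = ["cat", "dog", "bird"]  # the only categories the network knows


def softmax(logits):
    """Turn raw scores into probabilities that always sum to 1."""
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return the most probable known class and its softmax score."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs[best]


# Hypothetical raw scores produced for an image of a car (assumed values).
car_logits = [0.5, 3.2, 0.1]
label, confidence = classify(car_logits)
print(label, round(confidence, 2))  # the car is labeled "dog" with a score close to 0.9
```

The score is only a ranking among the classes seen during training, so a high value says nothing about whether the input belongs to any of them.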

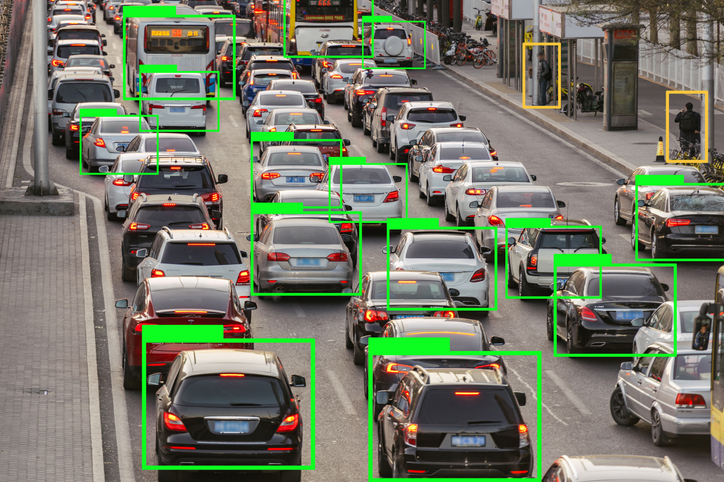

"Now imagine the reverse situation: a similar neural network, trained to recognize road signs, cars and common obstacles, and installed in an autonomous vehicle. A wild boar appears at the bend in a country road, a class of object the network has never seen before. The risk is great that it will interpret the animal neither as a tree nor as a pedestrian, and let the vehicle continue on its way towards this object that does not fit into its categories, and therefore does not exist for it."

Several avenues of research aim to remedy this poor handling of uncertainty. "The most promising is that of ensemble techniques," says Gianni Franchi. "They consist of using several expert neural networks in parallel, and checking that their results are consistent. But putting them into practice raises many difficulties linked to the subtleties of information theory."

Another disadvantage of this approach is that it is prohibitively expensive, since many expert neural networks must be trained simultaneously.
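The idea of running several networks in parallel and checking that their results are consistent can be illustrated with a minimal sketch. It is not the method of the ICLR paper: it uses a standard information-theoretic disagreement measure (the entropy of the averaged prediction minus the average entropy of the members), and the probability vectors below are made-up values standing in for the outputs of three hypothetical networks.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def ensemble_summary(member_probs):
    """Average the members' predictions and measure how much they disagree.

    Returns (mean prediction, total uncertainty, disagreement), where
    disagreement = entropy(mean) - mean(entropy of each member).
    """
    n = len(member_probs)
    k = len(member_probs[0])
    mean = [sum(m[i] for m in member_probs) / n for i in range(k)]
    total = entropy(mean)
    expected = sum(entropy(m) for m in member_probs) / n
    return mean, total, total - expected


# Assumed outputs of three networks on a familiar image: they agree.
familiar = [[0.90, 0.05, 0.05], [0.88, 0.07, 0.05], [0.92, 0.04, 0.04]]
# Assumed outputs on an unfamiliar image: each network is confident,
# but each picks a different class.
unfamiliar = [[0.90, 0.05, 0.05], [0.05, 0.90, 0.05], [0.05, 0.05, 0.90]]

_, _, d_familiar = ensemble_summary(familiar)
_, _, d_unfamiliar = ensemble_summary(unfamiliar)
print(d_familiar < d_unfamiliar)  # disagreement is larger on the unfamiliar image
```

In the second case every individual network reports about 90% confidence, yet the ensemble as a whole reveals the doubt that no single member expresses. The cost is visible too: three networks had to be evaluated instead of one.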

"With a few students, and this is the experiment I will describe at the ICLR conference, I have managed to train much simpler networks, consisting of fewer neurons, but which can approximate a much larger network. When we look at the predictions of all these small networks taken together, they turn out to be of quite comparable quality to those of much larger networks. It would be very beneficial to use them in autonomous vehicles, where there is a lot of data to analyze with limited computational resources."

Of course, ideally, each artificial neural network managing a crucial task should have some form of awareness of the fallibility of its results by modeling this uncertainty.

"I'm working on it," confides Gianni Franchi, without revealing for the moment which avenues he is thinking about, because he intends to present them at the next edition of the International Conference on Learning Representations (ICLR) in 2024. "The acceptance rate of papers is very low, around 20%. And of the papers that are accepted, 60% come from groups that have much larger resources than ours... But as the acceptance of our paper at ICLR 2023 has just demonstrated, innovative work still manages to break through. You have to be creative."

One day, in order to be more impartial, it may be artificial intelligences that will decide on the topics to be discussed at such conferences. Let's hope that on that day, Gianni Franchi's work will have given neural networks enough critical thinking skills to give a fairer reflection of the reality of academic research on artificial intelligence.